Australia has now entered a new phase of digital regulation. On 10 December 2025, it became the first country in the world to enforce a nationwide ban preventing anyone under 16 from holding an account on major social media platforms. The move has sparked global debate, with parents, child-safety researchers and the tech industry divided over its implications.

At the centre of the policy is a simple but disruptive requirement: social media companies must take reasonable steps to establish which of their users in Australia are under 16, reliably, repeatedly and at scale. This marks a decisive shift away from self-reported birthdays toward a more technical, and in some cases intrusive, system of digital age assurance.

For families, it raises new questions about privacy, childhood autonomy, and whether regulation can genuinely protect young people from the pressures and risks of online life. This explainer breaks down how the ban works, what it means in practice, and why age verification has become the new battleground in global tech policy.

When Did the Under-16 Social Media Ban Begin?

The ban came into effect on 10 December 2025. From that date, platforms classified as social media services must identify and deactivate the accounts of users under 16 and stop under-16s from creating new ones. The law is enforceable, not advisory: companies face penalties of up to A$49.5 million if the eSafety Commissioner decides they have not taken “reasonable steps” to keep underage users out.

The rule applies to major global platforms including Facebook, Instagram, TikTok, Snapchat, YouTube, X, Reddit, Twitch and Kick. Threads is also included because it requires an Instagram profile.

The list is not fixed. If large numbers of children migrate to smaller or emerging platforms, the government has the authority to expand the scope of the ban.

Which Apps Are Not Covered?

A number of online platforms are excluded, including Roblox, Pinterest, YouTube Kids, Discord, WhatsApp, GitHub, Steam, Google Classroom, Messenger and LinkedIn.

Some are considered educational or low-risk. Others, such as Roblox and Discord, have faced child-safety concerns of their own but are not classified as “social media” for the purposes of the legislation.

How Does Age Verification Work Under the Australian Model?

This is the most challenging part of the new framework. Rather than relying on a single ID upload, platforms are expected to combine several technical methods.

The government has specified that, because of privacy risks, government ID must not be the only way users can prove their age. Instead, platforms are expected to use “multi-layered” age assurance systems. These typically include:

AI facial age estimation

Services such as Yoti and K-ID analyse a short selfie video to estimate a user’s age; the providers say the biometric data is not stored afterwards. Instagram, TikTok and Snapchat have adopted this approach.

Behavioural age signals

Platforms examine how users type, scroll and interact online. Patterns associated with younger teens trigger further checks.

Birthdate audits

Accounts with inconsistent date-of-birth data are flagged automatically.

Device and account signals

Account history, device usage and a user’s network of connections (the “social graph”) can indicate whether an account is likely to belong to a child.

Human review

In borderline cases, human moderators make a final decision.

Combining automated systems with human judgement in this way is new, and Australia is the first country to require platforms to apply this kind of layered age assurance nationwide.
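The layered approach described above can be pictured as a simple decision pipeline. The Python sketch below is purely illustrative: the signal names, the two-year buffer around the age threshold and the escalation rules are hypothetical assumptions, not how any particular platform or the eSafety Commissioner defines age assurance. It shows the general idea that strong signals can settle a case automatically, while borderline or conflicting ones are passed to a human reviewer.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

MIN_AGE = 16
# Hypothetical margin (in years) around the threshold. Facial estimates that
# fall inside it are treated as borderline rather than trusted outright.
BUFFER_YEARS = 2.0


class Decision(Enum):
    ALLOW = "allow"
    RESTRICT = "restrict"
    HUMAN_REVIEW = "human_review"


@dataclass
class AgeSignals:
    """Illustrative inputs only; real platforms' signals are not public."""
    declared_age: Optional[int]       # age implied by the stated date of birth
    facial_estimate: Optional[float]  # years, from a selfie-based estimator
    behaviour_looks_young: bool       # typing/scrolling patterns resemble a younger teen
    birthdate_inconsistent: bool      # date-of-birth audit found conflicting data
    device_looks_young: bool          # device or account history suggests a child


def assess_age(s: AgeSignals) -> Decision:
    """Combine several hypothetical signals into one decision, escalating doubt."""
    # No usable age signal at all: a person has to look at it.
    if s.declared_age is None and s.facial_estimate is None:
        return Decision.HUMAN_REVIEW

    # A declared age under 16 is enough to restrict on its own.
    if s.declared_age is not None and s.declared_age < MIN_AGE:
        return Decision.RESTRICT

    # Facial age estimation: clearly under -> restrict, near the line -> review.
    if s.facial_estimate is not None:
        if s.facial_estimate < MIN_AGE - BUFFER_YEARS:
            return Decision.RESTRICT
        if s.facial_estimate < MIN_AGE + BUFFER_YEARS:
            return Decision.HUMAN_REVIEW

    # Softer signals never restrict on their own; any one triggers a review.
    if s.behaviour_looks_young or s.birthdate_inconsistent or s.device_looks_young:
        return Decision.HUMAN_REVIEW

    return Decision.ALLOW


if __name__ == "__main__":
    adult = AgeSignals(25, 27.0, False, False, False)
    borderline = AgeSignals(20, 16.5, False, False, False)
    print(assess_age(adult).value)       # allow
    print(assess_age(borderline).value)  # human_review
```

Using a buffer around 16 rather than a hard cut-off reflects the fact that facial estimation has a margin of error: the cases closest to the threshold are the ones most likely to need a second method or a human decision.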

What Happens to Teen Accounts Now?

Platforms must give users under 16 a choice before their accounts are restricted. Most will offer:

- The option to download every photo, message and piece of posted content

- A “frozen” or deactivated state until the user turns 16

- The ability to delete the account entirely

- A pathway to reactivate the account on their 16th birthday

TikTok and Snapchat have stated that content will not be deleted unless the user requests it.

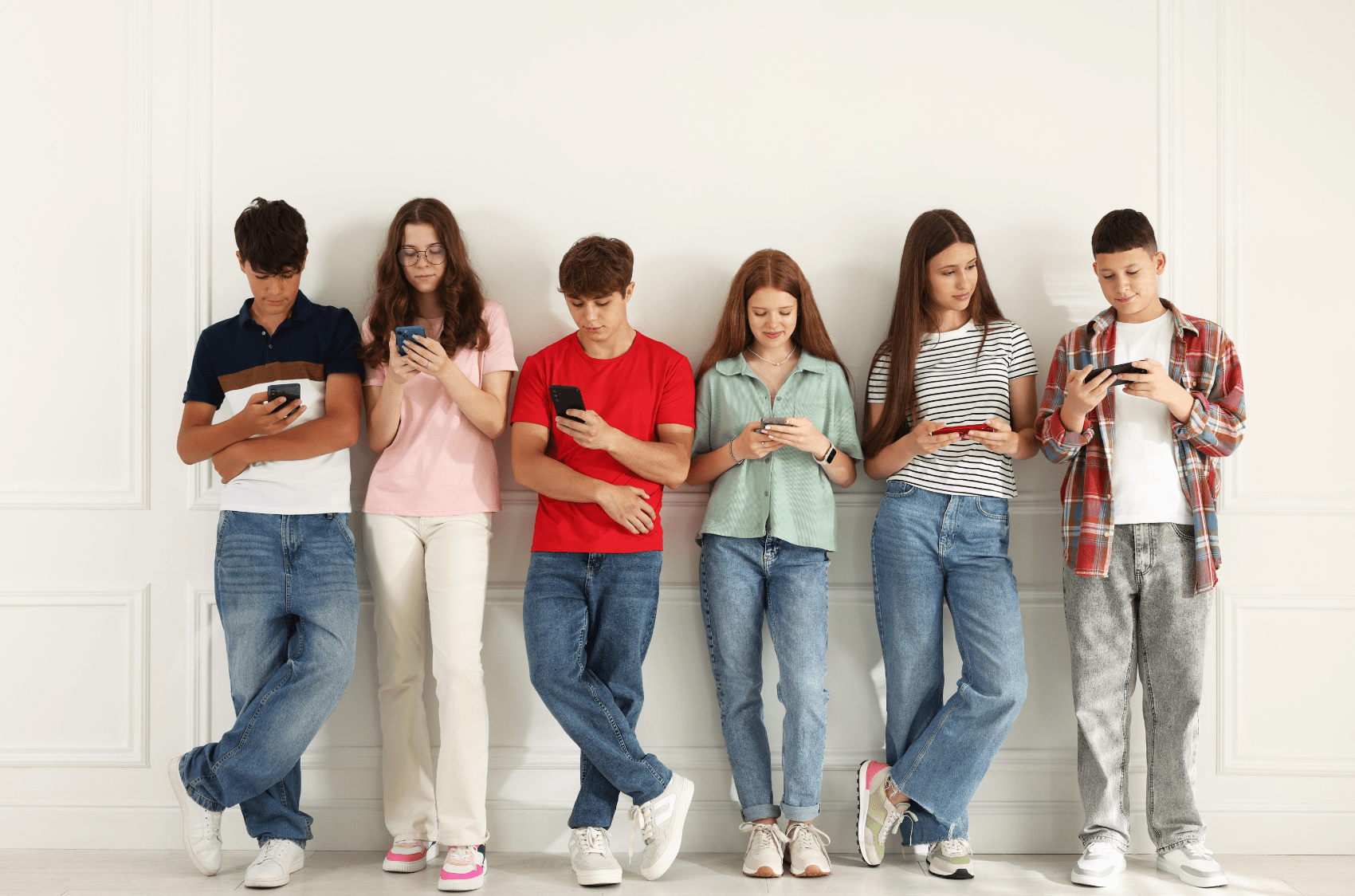

This affects hundreds of thousands of young Australians. Snapchat estimated that around 440,000 of its users aged 13 to 15 would be removed or suspended.

What If a Young Person Is Wrongly Classified as Under 16?

Errors are expected, particularly for users who appear younger than their age or whose behaviour is similar to that of younger teens.

Appeal routes generally include:

- A video-selfie age estimate

- An upload of government ID

- A bank card or credit card authorisation

- A manual review

Platforms have been told the appeals process must be transparent and accessible.

Will the Ban Work Immediately?

The government has acknowledged that full enforcement will take time. The eSafety Commissioner has warned the public not to expect the ban to be “perfect on day one” and has indicated that platforms will be given a reasonable period to roll out their age-verification systems.

How Does Australia Compare With the EU and UK?

Australia is the first country to introduce an outright ban for under-16s, but it is not alone in tightening online protections for young people. Under the Digital Services Act, the European Union already requires platforms accessible to minors to adopt stronger protections, including age assurance where appropriate, a ban on advertising based on profiling of minors, high-privacy defaults and greater transparency from tech companies.

The UK’s Online Safety Act moves in a similar direction, although it stops short of a full ban. The Act already requires “highly effective” age checks on services that host pornography or certain other harmful content, but unlike Australia, the UK has not set a blanket minimum age for social media use. With both Australia and the EU escalating requirements, some British policymakers are calling for the UK to go further with stricter age checks for social media.

Young Minds’ Take on Australia’s Big Move

Young Minds isn’t sitting on the sidelines of this debate. Our founder, Nino, made that clear in a recent LinkedIn post that is now drawing a lot of attention:

What Australia did this week is the first real attempt to pull the brakes on a system that has been running over our children for years.

Australia’s decision sends a clear message that children’s safety comes first, a position Young Minds strongly supports. The real shift, as our founder highlights, is about courage: the courage to move first, face criticism, and prioritise children over convenience.

What This Means for Families

The Australian model represents a decisive shift in global online safety regulation. But the ban alone cannot replace the need for digital literacy, emotional resilience and healthy boundaries at home.

Whether the UK follows this model or not, age verification is becoming a standard part of online life. Children growing up today will face age checks for many online services that were previously open to anyone.

For parents, this is a moment to rethink digital readiness, not just digital restriction. As governments build structural protections, families still play the most important role: helping children understand risk, make good decisions, and eventually manage the digital world independently.

This is where free tools like the Young Minds Safety Assistant become invaluable. Parents can quickly check whether an app, website or platform is safe for their child, understanding not just whether it’s risky, but why. This empowers families to make informed decisions, have better conversations and build trust without relying on guesswork or fear.

Parents also ask:

What is the legal age for social media in Australia now?

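The minimum age is now 16 for all platforms included in the ban. Platforms must deactivate the accounts of anyone under 16; the legal obligation, and any penalty, falls on the platforms rather than on children or parents.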

How does Australia’s age verification system work?

Platforms use a mix of AI facial age estimation, behavioural analysis, date-of-birth checks, device signals and human review. ID can be used in appeals but cannot be the only method.

Could the UK introduce a similar rule?

It is possible. The UK’s Online Safety Act already requires stronger protections for minors, and several MPs have argued that Australia’s age verification approach should be considered.