Australia’s New Digital Regulation: Under‑16 Social Media Ban

How It Started

On 10 December 2025, Australia became the first country in the world to enforce a nationwide ban preventing anyone under 16 from holding an account on major social media platforms. In the lead-up, young users began receiving notifications that their accounts would be restricted or deactivated, marking the start of a new era of digital regulation.

The law requires social media companies to take reasonable steps to establish the age of users in Australia, and to keep doing so over time. It signals a shift away from self-reported birthdays toward multi‑layered digital age verification. This landmark policy has sparked global debate, with parents, researchers, and the tech industry discussing its implications for privacy, childhood autonomy, and online safety.

Why It Is Important

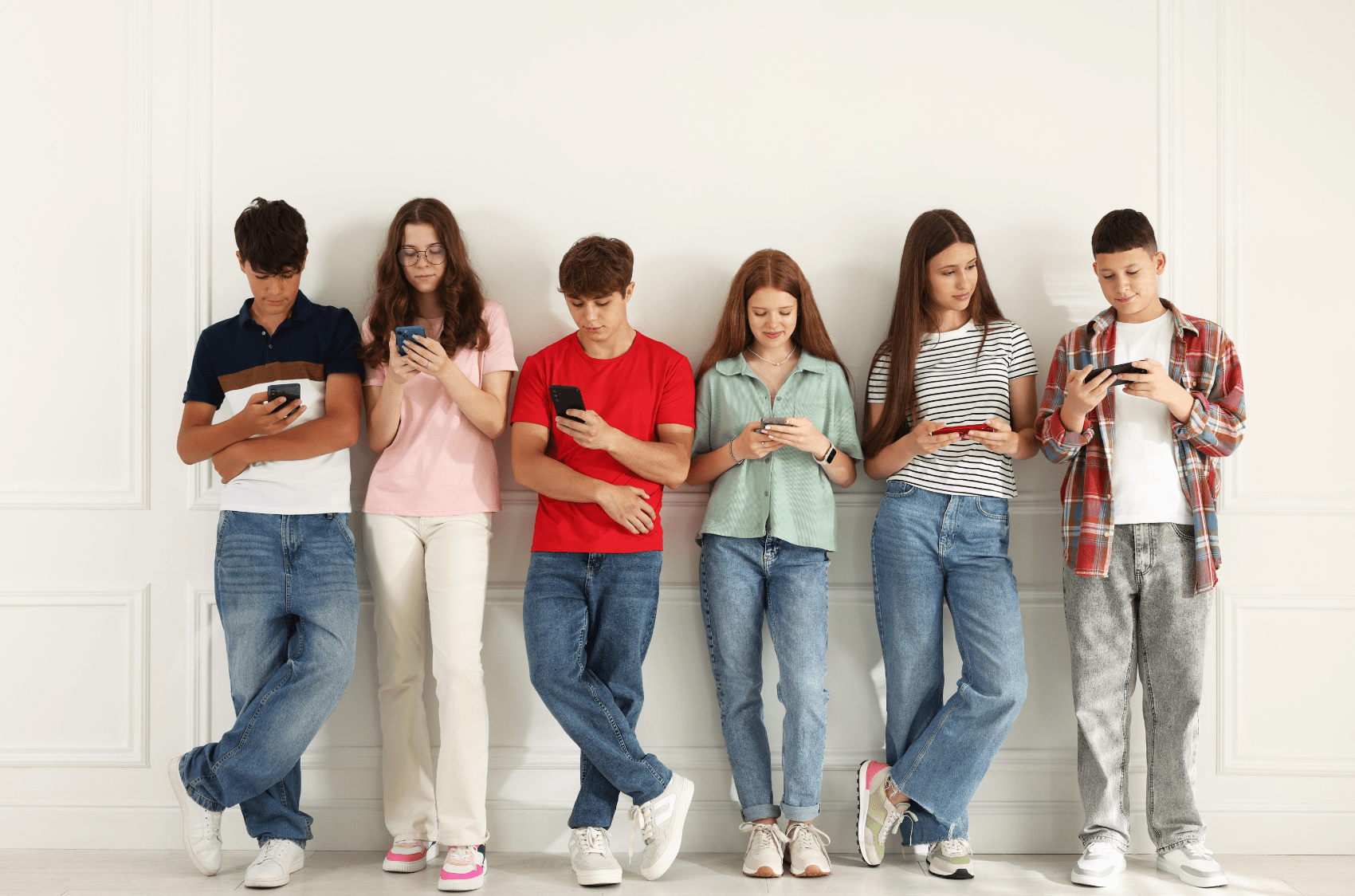

This ban tackles a simple but profound problem: platforms built on addictive algorithms and surveillance‑driven advertising have been profiting from kids without being accountable for the harms that come with early exposure to social media.

What the law does:

- Platforms face penalties of up to A$49.5 million if they fail to take “reasonable steps” to prevent under‑16s from holding accounts.

- Major platforms, including Facebook, Instagram, TikTok, Snapchat, YouTube, X, Reddit, Twitch, and Kick, must comply.

- Exemptions apply to some educational, messaging, and gaming services, such as WhatsApp, Roblox, Discord, Google Classroom, and YouTube Kids.

- Age checks are expected to be multi‑layered, combining methods such as AI facial age estimation, behavioural analysis, date‑of‑birth audits, device signals, and human review. Government-issued ID cannot be the only option offered, and is typically reserved for appeals.

Behind the headlines, hundreds of thousands of young Australians have already been affected, as platforms remove or suspend accounts held by under‑16s.

Courage Over Convenience

Some news outlets have called Australia’s under‑16 social media ban disruptive, and the Financial Times even labeled it “completely wrong”. That view misses the bigger picture. Change of this scale was never going to be smooth or barrier‑free. Real transformation is rarely tidy.

What Australia has done is the first real attempt to put the brakes on a system that has been running over our children for years. The government didn’t take the easy path; it did the hard thing first. By introducing a nationwide ban and requiring multi‑layered age verification, it has sent a clear message: companies can no longer profit from children without being accountable for the consequences.

What truly matters is not whether the first step is perfect, but that someone finally had the courage to take it. Of course, the rollout won’t be flawless. Nothing this big ever is. But for the first time, Big Tech is being held to account for its impact on children, and that is a milestone worth acknowledging.

What Global Voices Are Saying About the Ban

Australia’s decision has drawn reactions from high‑profile public figures and advocates around the world: not just politicians, but voices with real reach and influence.

- Oprah Winfrey has voiced support for age limits on social media, suggesting that restricting access could improve young people’s well‑being and reduce their exposure to addictive platforms.

- Jonathan Haidt, social psychologist and author, tweeted: “The Australia social media age limit policy is the most significant measure to protect children from social media harms, and other countries are already planning similar measures.”

- Scott Galloway has argued publicly that there should be no social media under the age of 16, noting that tech companies are extremely effective at capturing attention and that childhood online poses unique dangers.

Across the world, this policy has sparked conversation, ranging from calls for similar frameworks to skepticism about enforceability. Either way, Australia’s action has clearly become a catalyst for global debate.

Similar Laws in Other Countries

- European Union (EU): Under the Digital Services Act, the EU requires platforms to protect minors, with measures including stricter age verification, a ban on personalised advertising based on profiling of minors, default high-privacy settings, and greater transparency. The European Parliament has debated these rules at length, reflecting a strong political commitment to online child safety.

- United Kingdom (UK): The UK’s Online Safety Act strengthens protections for children online, including content moderation duties and safety measures for platforms. While it does not set a blanket age‑based ban like Australia’s, some UK policymakers have openly discussed adopting similar restrictions inspired by the Australian model.

These international efforts show that governments are no longer content to let platforms self‑regulate, and are stepping in through bans, verification requirements, or stricter safety duties.

What This Means for Families

The Australian model represents a decisive shift in global online safety regulation, but a ban alone is not a silver bullet.

Parents and caregivers should continue prioritising:

- Digital literacy — helping children understand how platforms work.

- Emotional resilience — supporting kids in navigating online pressures.

- Healthy boundaries — fostering balanced digital habits.

Tools like the Young Minds Safety Assistant help families assess whether an app or platform is appropriate, explaining not just whether it is risky but why. This supports better family conversations and more informed decisions than a compliance checklist can.

It’s free to use and lets you quickly check whether an app is safe for your child, so you can decide with confidence before downloading.

Quick summary:

- Minimum age for social media accounts in Australia: 16.

- Verification framework: Multi‑layered methods with appeal options.

- Global context: Other countries are tightening protections, with the EU and UK leading on safety measures, and calls for age verification rising worldwide.

If this helped you better understand how Australia’s new under-16 social media ban affects app safety, share it with another parent who might find it useful.

Parents also ask:

What is the new social media age limit in Australia?

The minimum age for social media accounts in Australia is now 16. Platforms must take reasonable steps to deactivate or restrict accounts held by anyone under 16.

When did the ban come into effect?

The ban took effect on 10 December 2025.

Which platforms are not covered?

Platforms like Roblox, Pinterest, Discord, WhatsApp, GitHub, Steam, Google Classroom, Messenger, and LinkedIn are excluded because their main purpose is messaging, gaming, education, or professional networking, or because they are considered lower risk.

How does age verification work?

Platforms are expected to use a multi-layered approach, which can include:

- AI facial age estimation

- Behavioural analysis (typing, scrolling, interaction patterns)

- Birthdate audits

- Device and account signals

- Human review

Government-issued ID cannot be the only option offered, and is typically reserved for appeals.